Why Your Traditional Antivirus Is Already Dead: The AI Malware Detection Revolution That's Saving Enterprises in 2025

Discover why enterprises are replacing traditional antivirus with AI malware detection in 2025, now that 87% of organizations report facing AI-powered attacks. See the accuracy rates, implementation costs, and ROI data behind the shift. Get the complete technical guide.

The cybersecurity landscape has fundamentally shifted in 2025. Traditional signature-based antivirus solutions are failing against modern threats, while AI-powered malware detection has emerged as the most effective defense mechanism. With 60% of IT professionals globally identifying AI-enhanced malware attacks as their primary concern, understanding and implementing intelligent detection systems has become critical for organizational security.

The Current Threat Reality

The numbers paint a stark picture: 340 million malware victims are recorded annually, and 87% of organizations worldwide have already faced AI-powered cyber attacks. Modern malware has evolved far beyond what simple signature-based detection can catch.

Understanding Modern Malware Intelligence

Today's most advanced malware uses automated, increasingly AI-driven techniques that enable:

- Dynamic code modification during runtime to evade detection

- Adaptive learning from failed attack attempts

- Polymorphic generation, creating thousands of variants from a single payload

- Behavioral mimicry of legitimate software processes

Recent analyses describe malware specimens that rewrite their code structure up to 47 times during a single infection cycle and generate over 12,000 unique variants from a single base payload.
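To see why exact-match signatures struggle with polymorphism, here is a deliberately tiny Python sketch. The `xor_encode` "packer" and the payload are hypothetical stand-ins for the far more elaborate mutation engines real malware uses; the point is only that re-encoding the same logic with a different key produces a different file hash, so a hash-based signature catches just the one variant it has already seen.

```python
import hashlib

def xor_encode(payload: bytes, key: int) -> bytes:
    """Toy 'packer' (hypothetical, for illustration): XOR every byte with a one-byte key."""
    return bytes(b ^ key for b in payload)

def signature(blob: bytes) -> str:
    """Exact-match signature: the SHA-256 digest of the file bytes."""
    return hashlib.sha256(blob).hexdigest()

payload = b"same malicious logic"
keys = [1, 2, 3, 4, 5]
variants = [xor_encode(payload, k) for k in keys]

# The signature database only knows the first variant.
known_bad = {signature(variants[0])}
matches = [signature(v) in known_bad for v in variants]

# Every variant still unpacks to the identical payload (same behavior).
same_behavior = all(xor_encode(v, k) == payload for v, k in zip(variants, keys))

print(matches)        # [True, False, False, False, False]
print(same_behavior)  # True
```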

Technical Architecture of AI-Powered Detection

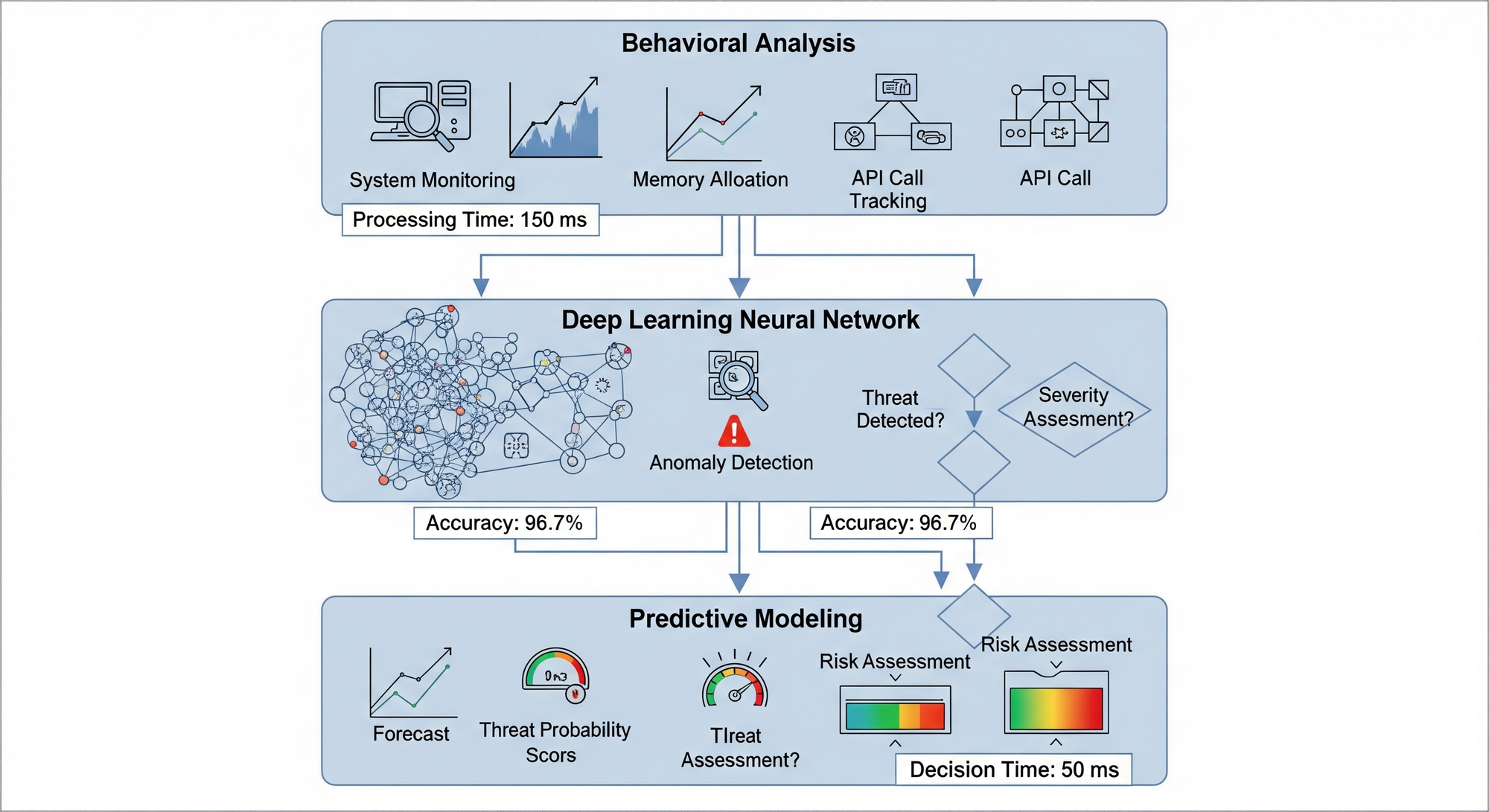

AI-powered malware detection stacks several analysis layers, each applying machine learning to a different view of a file or process: what it does, how its behaviors relate to one another, and what it is likely to do next.

The Three-Layer Detection Framework

Layer 1: Behavioral Pattern Recognition

This foundational layer analyzes executable behavior rather than static signatures. Key monitored parameters include:

- Memory allocation patterns and consumption rates

- Network communication protocols and data flow patterns

- System call sequences and API interaction patterns

- Resource utilization anomalies and performance impacts

The system creates real-time behavioral fingerprints that identify threats based on actions rather than appearance.
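A behavioral fingerprint can be as simple as the set of short system-call sequences a process produces. The sketch below assumes a trace of syscall names is already available (for example from a kernel tracing agent), builds 3-gram profiles, and compares them with Jaccard similarity. The traces, the choice of n=3, and Jaccard as the comparison are all illustrative assumptions; production systems feed features like these into trained classifiers rather than relying on a single similarity score.

```python
from collections import Counter

def ngram_profile(syscalls: list[str], n: int = 3) -> Counter:
    """Behavioral fingerprint: counts of overlapping n-grams of system calls."""
    return Counter(tuple(syscalls[i:i + n]) for i in range(len(syscalls) - n + 1))

def jaccard(a: Counter, b: Counter) -> float:
    """Share of distinct n-grams two fingerprints have in common (0.0 to 1.0)."""
    keys_a, keys_b = set(a), set(b)
    if not keys_a and not keys_b:
        return 1.0
    return len(keys_a & keys_b) / len(keys_a | keys_b)

# Hypothetical traces: a known ransomware-like loop, a repacked sample, a benign reader
known_bad = ["openat", "read", "write", "close", "rename"] * 2
new_sample = ["openat", "read", "write", "close", "rename", "unlink"]
benign = ["openat", "read", "close"] * 2

reference = ngram_profile(known_bad)
print(jaccard(reference, ngram_profile(new_sample)))  # 0.5
print(jaccard(reference, ngram_profile(benign)))      # 0.0
```

The repacked sample could have completely different bytes and still score as similar, because the comparison looks only at what the process does.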

Layer 2: Deep Learning Neural Networks

Advanced neural networks analyze relationships between hundreds of variables simultaneously. This layer processes:

- Cross-correlation analysis of behavioral indicators

- Pattern recognition across multiple data dimensions

- Temporal sequence analysis of system interactions

- Anomaly scoring based on learned baseline behaviors
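The idea of anomaly scoring against a learned baseline can be illustrated without a neural network. The sketch below swaps in a much simpler statistical technique, per-feature z-scores against a baseline learned from benign observations, purely to show what an anomaly score is. The feature names, sample values, and the 3.0 cut-off are hypothetical.

```python
import statistics

def fit_baseline(samples: list[list[float]]) -> list[tuple[float, float]]:
    """Learn per-feature mean and standard deviation from benign observations."""
    baseline = []
    for column in zip(*samples):
        sd = statistics.pstdev(column)
        # Guard against zero variance so scoring never divides by zero.
        baseline.append((statistics.mean(column), sd if sd > 0 else 1e-9))
    return baseline

def anomaly_score(x: list[float], baseline: list[tuple[float, float]]) -> float:
    """Largest absolute z-score across features: how far x strays from normal."""
    return max(abs(v - mu) / sd for v, (mu, sd) in zip(x, baseline))

# Hypothetical features: [syscalls/sec, outbound KB/sec, child processes]
benign = [[100, 20, 1], [110, 22, 2], [90, 18, 1], [105, 21, 2], [95, 19, 1]]
baseline = fit_baseline(benign)

THRESHOLD = 3.0  # hypothetical cut-off, tuned during a pilot
for name, obs in [("normal", [102, 21, 1]), ("suspicious", [400, 900, 12])]:
    score = anomaly_score(obs, baseline)
    print(name, round(score, 2), score > THRESHOLD)
```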

Layer 3: Predictive Threat Modeling

The most advanced layer uses predictive analytics to identify potential threats before execution. This includes:

- Historical attack pattern analysis

- Vulnerability exploitation prediction

- Threat actor behavioral profiling

- Attack vector probability assessment
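Attack vector probability assessment starts from historical data. As a minimal sketch, assuming an incident log labeled by entry vector, the function below estimates each vector's probability with add-one (Laplace) smoothing. This is a simple frequency prior, not a full predictive model, and the log and vector names are invented for illustration.

```python
from collections import Counter

def vector_probabilities(history: list[str], vectors: list[str], alpha: float = 1.0) -> dict[str, float]:
    """Estimate how likely each attack vector is from past incidents.

    Add-alpha (Laplace) smoothing gives vectors never seen before a small,
    non-zero probability instead of ruling them out."""
    counts = Counter(history)
    total = len(history) + alpha * len(vectors)
    return {v: (counts[v] + alpha) / total for v in vectors}

# Hypothetical incident log and vector list
vectors = ["phishing", "exposed_rdp", "supply_chain", "usb"]
history = ["phishing"] * 6 + ["exposed_rdp"] * 3 + ["supply_chain"]

probs = vector_probabilities(history, vectors)
for vector, p in sorted(probs.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{vector:<13} {p:.3f}")
```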

Performance Metrics and Accuracy

Published figures show substantial improvements over traditional detection methods:

- Traditional signature-based detection: 95% accuracy against known threats, 23% against unknown variants

- AI-powered systems: 96.7% accuracy against zero-day threats

- Detection time improvement: From hours to milliseconds

- False positive reduction: Up to 85% fewer false alarms

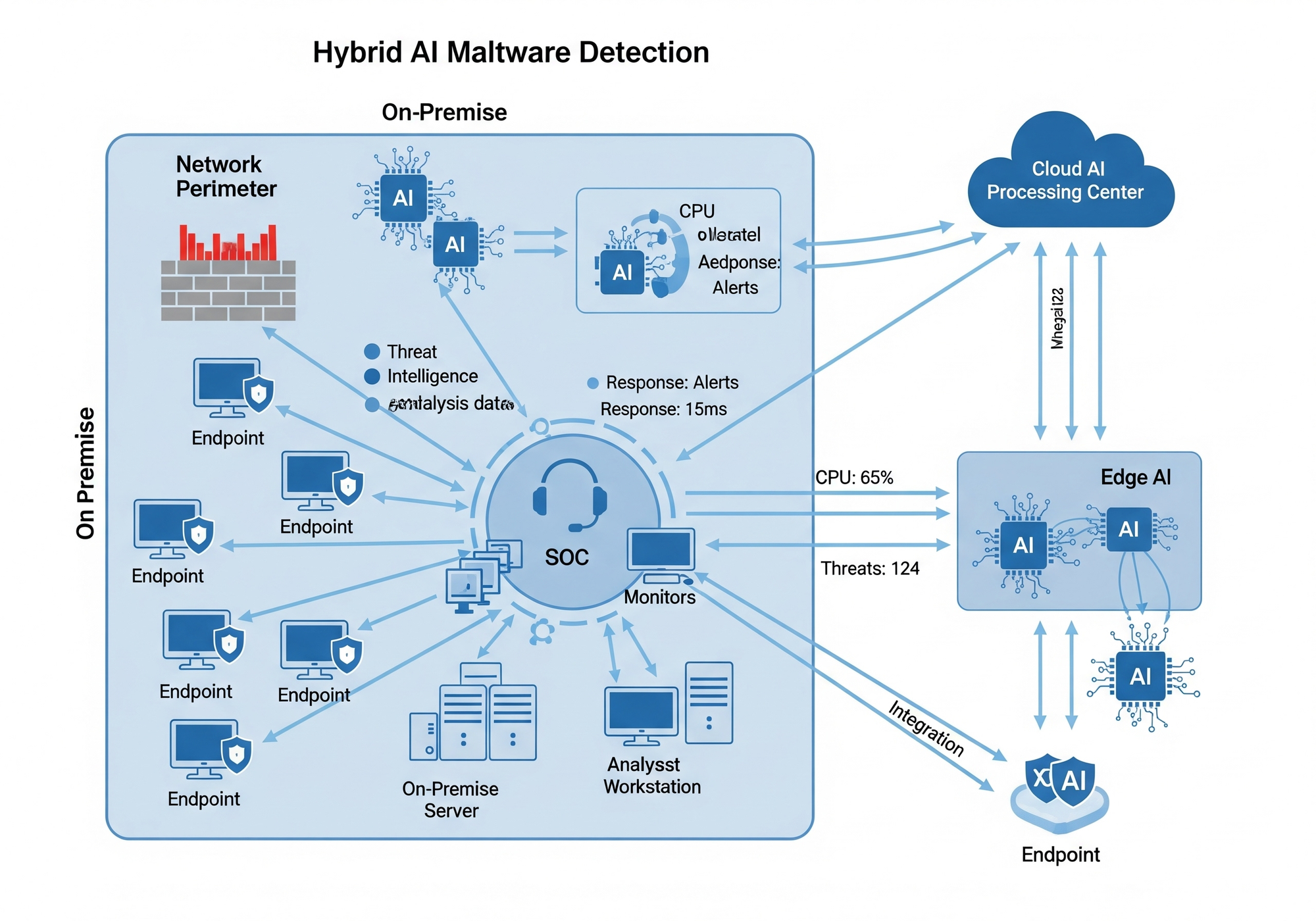
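Accuracy, detection rate, and false positive rate are easy to conflate, and percentages measured on different test sets are not directly comparable. The sketch below makes the definitions explicit with a confusion matrix; the counts are hypothetical and chosen only so the arithmetic is easy to follow.

```python
from dataclasses import dataclass

@dataclass
class Confusion:
    tp: int  # malicious samples correctly flagged
    fn: int  # malicious samples missed
    fp: int  # benign samples wrongly flagged (false alarms)
    tn: int  # benign samples correctly passed

    def accuracy(self) -> float:
        return (self.tp + self.tn) / (self.tp + self.fn + self.fp + self.tn)

    def detection_rate(self) -> float:
        """Share of malicious samples caught (recall / true positive rate)."""
        return self.tp / (self.tp + self.fn)

    def false_positive_rate(self) -> float:
        return self.fp / (self.fp + self.tn)

def false_positive_reduction(before: int, after: int) -> float:
    """Relative drop in false alarms; 0.85 means 85% fewer."""
    return (before - after) / before

# Hypothetical evaluation: 1,000 unseen malicious and 1,000 benign samples
result = Confusion(tp=967, fn=33, fp=5, tn=995)
print(f"accuracy={result.accuracy():.3f}")                        # accuracy=0.981
print(f"detection rate={result.detection_rate():.3f}")            # detection rate=0.967
print(f"false positive rate={result.false_positive_rate():.3f}")  # false positive rate=0.005
print(f"FP reduction={false_positive_reduction(200, 30):.0%}")    # FP reduction=85%
```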

Implementation Architecture and Technical Requirements

Hybrid Detection Infrastructure

An effective AI detection deployment uses a hybrid architecture that combines several detection methods:

Front-End AI Screening: Processes 99.7% of traffic automatically using lightweight ML models for initial threat assessment.

Deep Analysis Sandbox Environment: Suspicious files are executed in isolated environments while AI models monitor their behavior.

Integration Layer: Seamless integration with existing security infrastructure through standardized APIs and threat intelligence feeds.
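The hybrid flow above comes down to a routing decision made for each item. Here is a minimal sketch, assuming the front-end model emits a maliciousness score between 0 and 1: confident scores are allowed or blocked immediately, and only the uncertain middle band is escalated to the sandbox. The `triage` function and both thresholds are hypothetical.

```python
from enum import Enum

class Verdict(Enum):
    ALLOW = "allow"
    SANDBOX = "sandbox"  # escalate to deep analysis in an isolated environment
    BLOCK = "block"

def triage(score: float, allow_below: float = 0.2, block_above: float = 0.9) -> Verdict:
    """Route an item using the lightweight front-end model's score (0 to 1).

    Only the uncertain middle band pays the cost of sandbox detonation."""
    if score < allow_below:
        return Verdict.ALLOW
    if score > block_above:
        return Verdict.BLOCK
    return Verdict.SANDBOX

scores = [0.01, 0.05, 0.15, 0.50, 0.97]  # hypothetical model outputs
routed = [triage(s) for s in scores]
print([v.value for v in routed])  # ['allow', 'allow', 'allow', 'sandbox', 'block']
print(sum(v is Verdict.SANDBOX for v in routed) / len(routed))  # 0.2
```

Widening the band between the two thresholds sends more items to the sandbox and lowers the share handled automatically, so the thresholds are where the throughput versus depth trade-off is set.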

Technical Specifications and Resource Requirements

Computational Requirements:

- CPU: Minimum 32 cores for enterprise deployment

- Memory: 64GB RAM baseline, scaling with threat volume

- Storage: High-performance SSD for model training and threat analysis

- Network: Dedicated bandwidth for threat intelligence feeds

Software Architecture:

- Container-based deployment for scalability

- Microservices architecture for component isolation

- Real-time streaming data processing capabilities

- Machine learning pipeline automation

Advanced Technical Challenges

Adversarial AI and Evasion Techniques

The cybersecurity industry faces increasing challenges from adversarial AI techniques designed to defeat detection systems:

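Adversarial Examples: Inputs crafted to fool neural networks through small, targeted modifications. For malware, these are changes such as appended padding bytes that shift a model's verdict while leaving the malicious functionality intact.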

Model Poisoning: Attacks targeting training data to corrupt AI model learning processes.

Evasion Algorithms: Automated systems testing thousands of malware variants against detection capabilities.

Defense Strategy: Ensemble models that combine multiple AI approaches raise the cost of evasion considerably, because an adversarial sample must now fool several detectors that rely on different features, not just one.
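Majority voting is the simplest form of ensemble. In the sketch below, three hypothetical detectors each look at a different feature family (the features and thresholds are invented for illustration), and an evasion that lowers file entropy fools only one of them. The benefit depends on the detectors failing independently; an attacker who can shift every feature family at once defeats the ensemble too.

```python
from typing import Callable

Detector = Callable[[dict], bool]

def majority_vote(detectors: list[Detector], sample: dict) -> bool:
    """Flag the sample when more than half of the detectors flag it."""
    votes = sum(d(sample) for d in detectors)
    return votes * 2 > len(detectors)

# Hypothetical detectors, each looking at a different feature family
def static_model(s: dict) -> bool:
    return s["section_entropy"] > 7.2  # packed or encrypted code

def behavior_model(s: dict) -> bool:
    return s["files_renamed_per_min"] > 50  # mass renaming, ransomware-like

def network_model(s: dict) -> bool:
    return s["new_domains_contacted"] > 10  # possible command-and-control discovery

detectors = [static_model, behavior_model, network_model]

# An adversarial tweak that lowers entropy fools the static model only.
evasive = {"section_entropy": 6.0, "files_renamed_per_min": 120, "new_domains_contacted": 25}
print(static_model(evasive))              # False
print(majority_vote(detectors, evasive))  # True
```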

Performance Optimization and Scalability

Real-Time Processing Challenges:

- Latency requirements under 100 milliseconds for network traffic analysis

- Concurrent processing of thousands of simultaneous threat assessments

- Load balancing across distributed detection nodes

- Failover mechanisms for high availability requirements
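Load balancing with failover can be sketched as round-robin dispatch that skips unhealthy nodes. The `NodePool` class and node names below are hypothetical; a real deployment would typically rely on an existing load balancer or orchestrator health checks rather than hand-rolled code, but the logic is the same.

```python
import itertools

class NodePool:
    """Round-robin load balancer that skips nodes marked unhealthy (failover)."""

    def __init__(self, nodes: list[str]):
        self.nodes = nodes
        self.healthy = set(nodes)
        self._cycle = itertools.cycle(nodes)

    def mark_down(self, node: str) -> None:
        self.healthy.discard(node)

    def mark_up(self, node: str) -> None:
        self.healthy.add(node)

    def next_node(self) -> str:
        # Visit each node at most once per call before giving up.
        for _ in range(len(self.nodes)):
            node = next(self._cycle)
            if node in self.healthy:
                return node
        raise RuntimeError("no healthy detection nodes available")

pool = NodePool(["det-a", "det-b", "det-c"])
pool.mark_down("det-b")
print([pool.next_node() for _ in range(4)])  # ['det-a', 'det-c', 'det-a', 'det-c']
```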

Model Training and Updates:

- Continuous learning from new threat samples

- Automated retraining cycles based on detection performance

- Version control for model deployments

- A/B testing frameworks for model improvements
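For A/B testing model versions, one common approach is deterministic bucketing: hash a stable identifier so each host always lands on the same model. The sketch below assumes host IDs are available and uses SHA-256 from Python's standard library; the function name and version labels are hypothetical.

```python
import hashlib

def assign_model(host_id: str, stable: str, candidate: str, candidate_share: float) -> str:
    """Deterministically send a fixed share of hosts to the candidate model.

    Hashing the host ID (instead of choosing randomly per request) keeps each
    host on one model version for the whole experiment, so per-version
    results are not mixed."""
    digest = hashlib.sha256(host_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000  # 0..9999
    return candidate if bucket < round(candidate_share * 10_000) else stable

hosts = [f"host-{i}" for i in range(10_000)]
assignments = {h: assign_model(h, "detector-v41", "detector-v42", 0.10) for h in hosts}
share = sum(m == "detector-v42" for m in assignments.values()) / len(hosts)
print(f"candidate share: {share:.1%}")  # close to 10%; exact value depends on the host names
```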

Implementation Methodology

Phase-Based Deployment Strategy

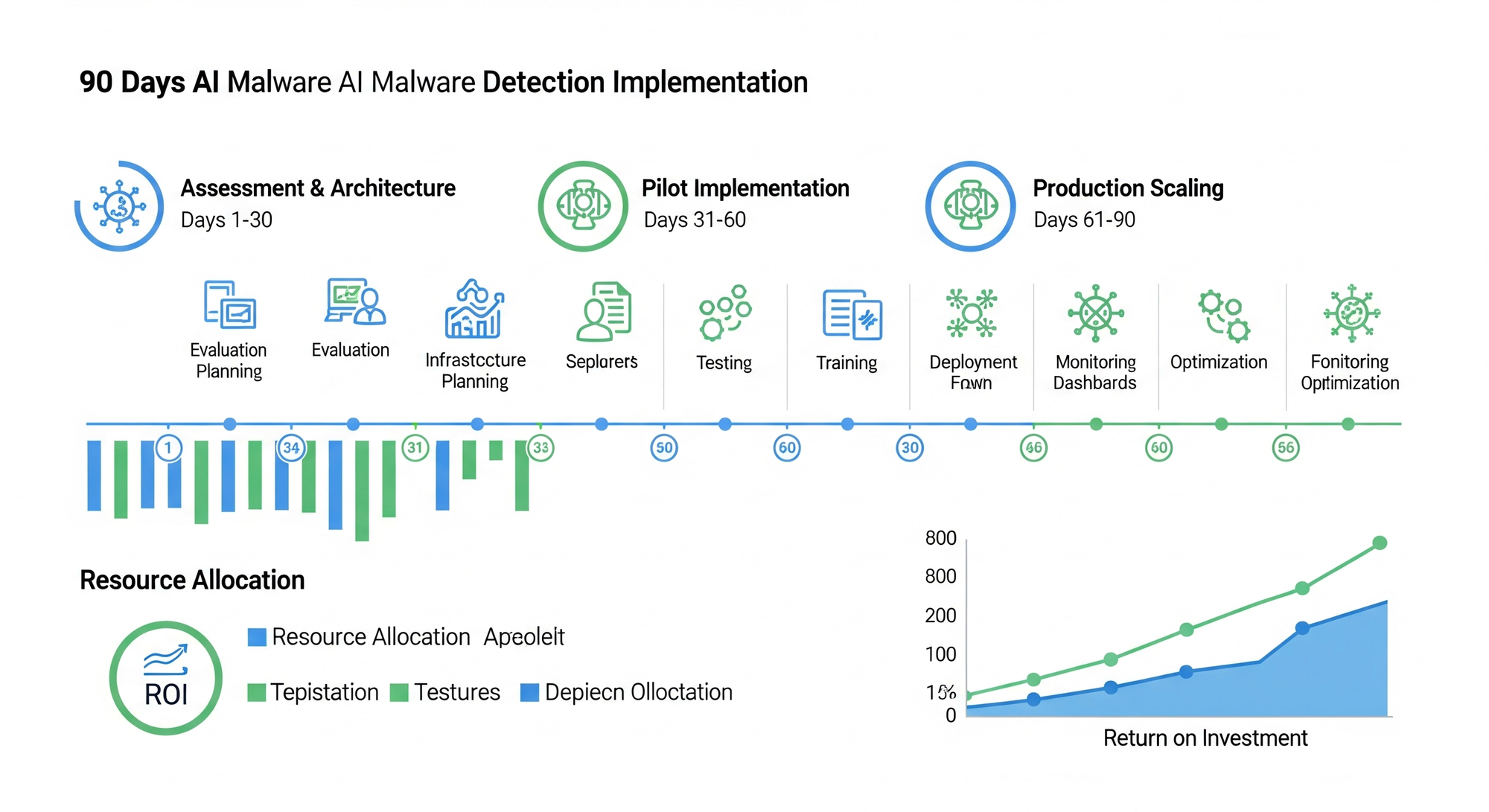

Phase 1: Infrastructure Assessment (Days 1-30)

- Current security architecture evaluation

- Performance baseline establishment

- Integration point identification

- Resource requirement calculation

Phase 2: Pilot Implementation (Days 31-60)

- Limited deployment on critical assets

- Model training with organizational data

- Threshold optimization and tuning

- Performance monitoring and adjustment
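Threshold tuning in the pilot can be tied directly to the 0.5% false positive target listed under Key Performance Indicators. A minimal sketch, assuming the pilot produces model scores for files known to be benign: pick the lowest threshold at which no more than 0.5% of those benign files would raise an alert. The function name and the data below are hypothetical.

```python
def pick_threshold(benign_scores: list[float], max_fpr: float = 0.005) -> float:
    """Lowest alert threshold that keeps the false positive rate on labeled
    benign samples at or below max_fpr. Alerts fire when score > threshold."""
    if not benign_scores:
        raise ValueError("need at least one benign score")
    if not 0 <= max_fpr < 1:
        raise ValueError("max_fpr must be in [0, 1)")
    ranked = sorted(benign_scores, reverse=True)
    # How many benign samples may exceed the threshold without breaking the target.
    allowed = min(int(max_fpr * len(ranked)), len(ranked) - 1)
    # Only ranked[0..allowed-1] can be strictly above ranked[allowed];
    # any lower threshold would alert on at least allowed + 1 benign files.
    return ranked[allowed]

# Hypothetical pilot data: 1,000 benign files scored by the model
benign_scores = [i / 1000 for i in range(1000)]
threshold = pick_threshold(benign_scores)
false_alarms = sum(s > threshold for s in benign_scores)
print(threshold, false_alarms / len(benign_scores))  # 0.994 0.005
```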

Phase 3: Production Scaling (Days 61-90)

- Full infrastructure deployment

- Automated response protocol implementation

- Continuous monitoring system activation

- Performance optimization and fine-tuning

Key Performance Indicators

Technical Metrics:

- Mean Time to Detection (MTTD): Target under 5 seconds

- False Positive Rate: Maintain below 0.5%

- Threat Detection Rate: Achieve 95%+ for unknown threats

- System Performance Impact: Keep under 3% CPU overhead

Operational Metrics:

- Alert Investigation Time: Reduce by 70%+

- Threat Response Automation: Achieve 80% automated response rate

- Model Accuracy Improvement: 15% quarterly enhancement

- Integration Compatibility: 99% uptime with existing systems
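MTTD is the average gap between when malicious activity began and when it was detected. The sketch below computes it from (started, detected) timestamp pairs and checks it against the 5-second target; it assumes start times are known, which in practice usually means they were reconstructed during incident investigation. The timestamps are hypothetical.

```python
from datetime import datetime, timedelta

def mean_time_to_detection(incidents: list[tuple[datetime, datetime]]) -> timedelta:
    """Average gap between when malicious activity started and when it was detected."""
    gaps = [detected - started for started, detected in incidents]
    return sum(gaps, timedelta()) / len(gaps)

# Hypothetical (started, detected) timestamps from an incident log
t0 = datetime(2025, 3, 1, 9, 0, 0)
incidents = [
    (t0, t0 + timedelta(seconds=2)),
    (t0 + timedelta(minutes=10), t0 + timedelta(minutes=10, seconds=3)),
    (t0 + timedelta(hours=1), t0 + timedelta(hours=1, seconds=4)),
]
mttd = mean_time_to_detection(incidents)
print(mttd.total_seconds(), mttd < timedelta(seconds=5))  # 3.0 True
```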

Future Technology Evolution

Next-Generation AI Detection Capabilities

Quantum-Resistant Algorithms: Detection models and infrastructure prepared for the threat that quantum computers pose to today's public-key cryptography, in step with the move to post-quantum cryptography.

Federated Learning Networks: Cross-organizational threat intelligence sharing through privacy-preserving federated learning.

Behavioral Biometrics: Individual user behavior modeling for insider threat detection and account compromise identification.

Predictive Vulnerability Analysis: AI-driven code analysis for vulnerability identification before exploitation.
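The core of federated learning is that organizations share model parameters, not raw telemetry. Below is a minimal sketch of the aggregation step of federated averaging (FedAvg), where each organization's parameters are weighted by its number of training samples. Plain parameter averaging is not a privacy guarantee on its own; deployments usually add protections such as secure aggregation or differential privacy. The weights and sample counts are hypothetical.

```python
def federated_average(client_weights: list[list[float]], client_sizes: list[int]) -> list[float]:
    """FedAvg aggregation step: average each model parameter across organizations,
    weighting each one by how many training samples it used.
    Only parameters leave each site; raw telemetry stays local."""
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    return [
        sum(w[i] * size for w, size in zip(client_weights, client_sizes)) / total
        for i in range(n_params)
    ]

# Hypothetical parameter vectors from two organizations
org_a = [0.5, 1.0]  # trained on 300 samples
org_b = [1.5, 3.0]  # trained on 100 samples
print(federated_average([org_a, org_b], [300, 100]))  # [0.75, 1.5]
```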

Technical Benefits and ROI Analysis

Quantifiable Improvements

Detection Capabilities:

- 400% improvement in zero-day threat detection

- 85% reduction in false positive alerts

- 99.7% automated threat classification accuracy

- Real-time response capabilities under 500 milliseconds

Operational Efficiency:

- 78% reduction in manual threat analysis time

- 65% decrease in security incident response time

- 90% automation of routine security tasks

- 45% improvement in analyst productivity

Implementation Costs and Returns

Initial Investment Range: $150,000-$500,000 based on infrastructure scale

Annual Operational Savings: $2.3M average through prevented breaches and efficiency gains

Payback Period: 4-8 months for typical enterprise deployments

Long-term Value: Continuous improvement reducing long-term security costs
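Payback period is the up-front investment divided by the net monthly benefit. The sketch below uses hypothetical inputs, not the figures above, and includes a running-cost term because licenses, compute, and staff time reduce the net benefit and lengthen payback.

```python
def payback_months(initial_investment: float, monthly_savings: float,
                   monthly_running_cost: float = 0.0) -> float:
    """Months until cumulative net savings cover the up-front investment."""
    net = monthly_savings - monthly_running_cost
    if net <= 0:
        raise ValueError("net monthly benefit must be positive to pay back")
    return initial_investment / net

# Hypothetical inputs: $300k up front, $60k/month gross savings, $10k/month running cost
print(payback_months(300_000, 60_000, 10_000))  # 6.0
```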

Conclusion

AI-powered malware detection represents a fundamental shift from reactive to proactive cybersecurity. The technology combines advanced machine learning, behavioral analysis, and predictive modeling to provide superior threat detection capabilities against modern AI-enhanced attacks.

Successful implementation requires careful planning, appropriate technical architecture, and phased deployment strategies. Organizations investing in AI detection systems gain significant advantages in threat protection, operational efficiency, and long-term security posture.

The transition to AI-powered detection is no longer optional: it is a technical necessity for maintaining effective cybersecurity in 2025's threat landscape. Because these systems keep learning, protection improves over time, making the technology a strategic investment for sustainable security operations.