The $2.3M Deepfake CEO Scam: How AI Voice Cloning is Bankrupting Companies

A finance director received a call from her CEO requesting a $2.3M transfer. The voice was perfect, the authority convincing. The CEO was 3,000 miles away. Voice cloning attacks increased 442% in 2024, costing businesses $1.8B globally. Discover protection strategies that actually work.

At 3:47 PM on a Tuesday in June 2025, a finance director at a multinational corporation received what seemed like an urgent call from her CEO. The voice was familiar, the tone authoritative, and the request reasonable: transfer $2.3 million to secure a time-sensitive acquisition deal. Within minutes, the money was gone. The CEO? He was in a board meeting 3,000 miles away, completely unaware of the conversation that had just cost his company millions.

Welcome to the new frontier of cybercrime: AI voice cloning scams, which increased by 442% in 2024 and show no sign of slowing down in 2025.

The Anatomy of Modern Voice Cloning Attacks

The technology behind these attacks has evolved from science fiction to accessible reality. Modern AI voice cloning requires as little as three seconds of audio to create a convincing replica of anyone's voice. This isn't the robotic, obviously artificial speech of previous generations. These are near-perfect reproductions that can fool even family members.

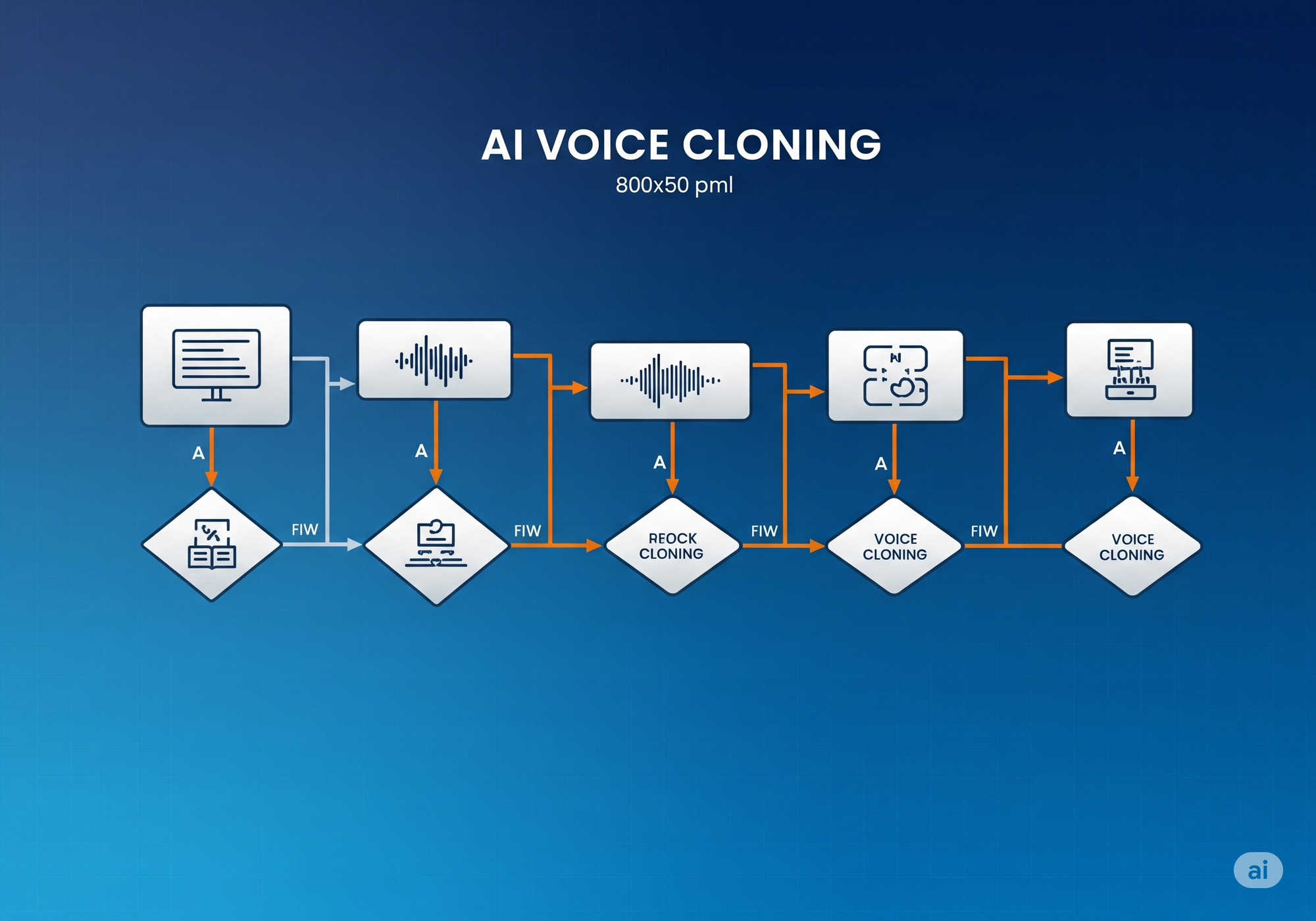

How Voice Cloning Technology Works

Real-Time Voice Synthesis uses advanced neural networks to analyze vocal patterns, speech cadence, and linguistic habits. The process involves:

- Voice Pattern Analysis: AI systems break down vocal characteristics including pitch, tone, accent, and speaking rhythm

- Linguistic Modeling: Analysis of word choice, sentence structure, and communication style

- Emotional Replication: Advanced systems can even replicate emotional states and stress patterns in speech

- Real-Time Generation: Modern tools can clone voices in real time during phone conversations
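To make "voice pattern analysis" more concrete, here is a minimal sketch of how one vocal characteristic, pitch, can be estimated from raw audio samples using autocorrelation. It is an illustration only, not how any particular cloning tool works: the function name `estimate_pitch_hz`, the 80-400 Hz search range, and the synthetic 200 Hz test tone are all assumptions made for this example.

```python
import math

def estimate_pitch_hz(samples, sample_rate, min_hz=80.0, max_hz=400.0):
    """Estimate the fundamental frequency from the autocorrelation peak.

    Hypothetical helper: a signal correlates most strongly with itself
    when shifted by exactly one pitch period.
    """
    min_lag = int(sample_rate / max_hz)  # shortest period considered
    max_lag = int(sample_rate / min_hz)  # longest period considered
    best_lag, best_score = min_lag, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        score = sum(samples[i] * samples[i + lag]
                    for i in range(len(samples) - lag))
        if score > best_score:
            best_lag, best_score = lag, score
    return sample_rate / best_lag

# Synthetic stand-in for a voice recording: 0.1 s of a pure 200 Hz tone.
SAMPLE_RATE = 8000
tone = [math.sin(2 * math.pi * 200.0 * n / SAMPLE_RATE) for n in range(800)]
pitch = estimate_pitch_hz(tone, SAMPLE_RATE)
print(f"Estimated pitch: {pitch:.1f} Hz")
```

Real speech is far messier than a pure tone, and production systems model many more features than pitch, but the principle of extracting measurable characteristics from a short audio sample is the same.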

The most sophisticated attacks combine voice cloning with social engineering intelligence gathered from social media, corporate websites, and previous data breaches to create incredibly convincing scenarios.

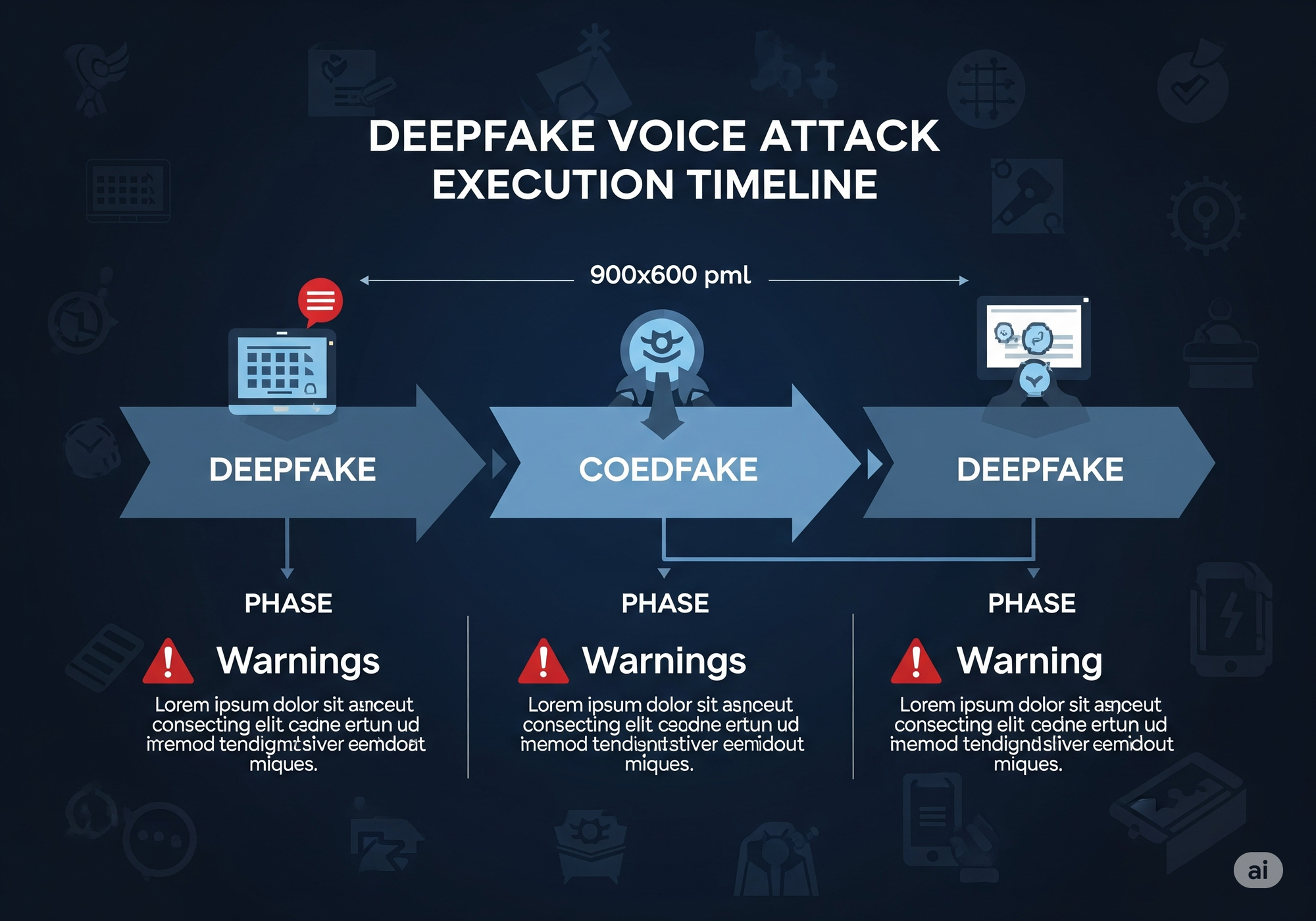

The $2.3M Case Study: Anatomy of a Perfect Scam

The June 2025 incident mentioned earlier follows the playbook of a sophisticated Business Email Compromise (BEC) attack, carried out over the phone and enhanced with voice cloning technology. Here's how the attackers executed this nearly flawless scam:

Phase 1: Intelligence Gathering

The attackers spent three weeks researching their target company:

- Analyzed the CEO's speaking patterns from publicly available conference videos

- Studied the company's acquisition history and typical deal structures

- Identified the finance director's reporting relationship and decision-making authority

- Gathered insider information about ongoing business negotiations

Phase 2: Voice Profile Creation

Using 47 seconds of audio from the CEO's quarterly earnings call, the attackers created a voice model with 94% accuracy. The AI system learned:

- Regional accent patterns and pronunciation quirks

- Typical business vocabulary and corporate jargon usage

- Speaking pace and natural pause patterns

- Emotional inflection during high-pressure situations

Phase 3: Social Engineering Execution

The attackers crafted a scenario that leveraged psychological pressure tactics:

- Created urgency with a "time-sensitive acquisition opportunity"

- Used insider knowledge of actual pending deals to establish credibility

- Applied authority-based persuasion through the CEO's voice and position

- Minimized verification opportunities by claiming confidentiality requirements

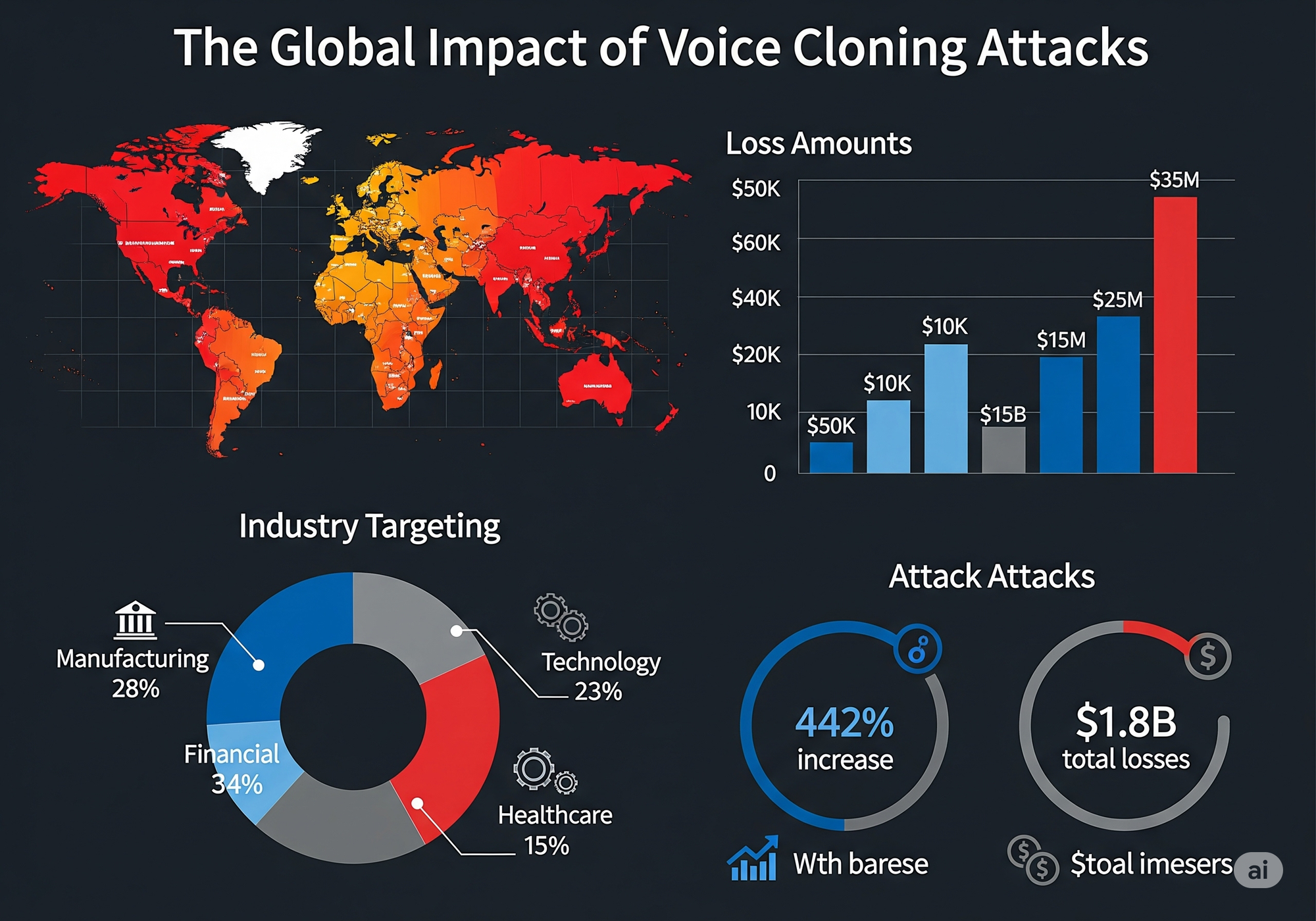

The Global Impact: Beyond Individual Cases

The $2.3M case isn't isolated. FBI reports indicate that voice cloning attacks cost businesses over $1.8 billion globally in 2024, with individual incidents ranging from $50,000 to $35 million.

Industry-Specific Targeting Patterns

Financial Services: 34% of documented voice cloning attacks target banks and investment firms, often requesting urgent wire transfers or trading authorizations.

Manufacturing: 28% of attacks focus on supply chain companies, with scammers impersonating executives to redirect payments to fraudulent suppliers.

Technology Sector: 23% of incidents target tech companies, particularly those with remote work policies that normalize phone-based authorizations.

Healthcare: 15% of attacks exploit the healthcare industry's urgent operational environment to authorize fraudulent medical equipment purchases.

Economic Multiplier Effects

Beyond direct financial losses, companies face:

- Regulatory fines averaging $340,000 for inadequate fraud prevention

- Insurance premium increases of 15-35% following successful attacks

- Reputation damage leading to customer churn rates of 8-12%

- Operational disruption costs averaging $180,000 in productivity losses

Technical Detection Challenges

Traditional fraud detection systems fail against sophisticated voice cloning because they rely on outdated authentication methods that assume voice authenticity.

Why Current Security Measures Fail

Voice Recognition Systems designed to verify identity become vulnerabilities when attackers can perfectly replicate the authorized voice patterns.

Multi-Factor Authentication Gaps arise because MFA policies often exclude phone-based communications from additional verification requirements, leaving an exploitable opening.

Human Psychological Factors make employees vulnerable to authority-based manipulation, especially when the voice convincingly matches their expectations.

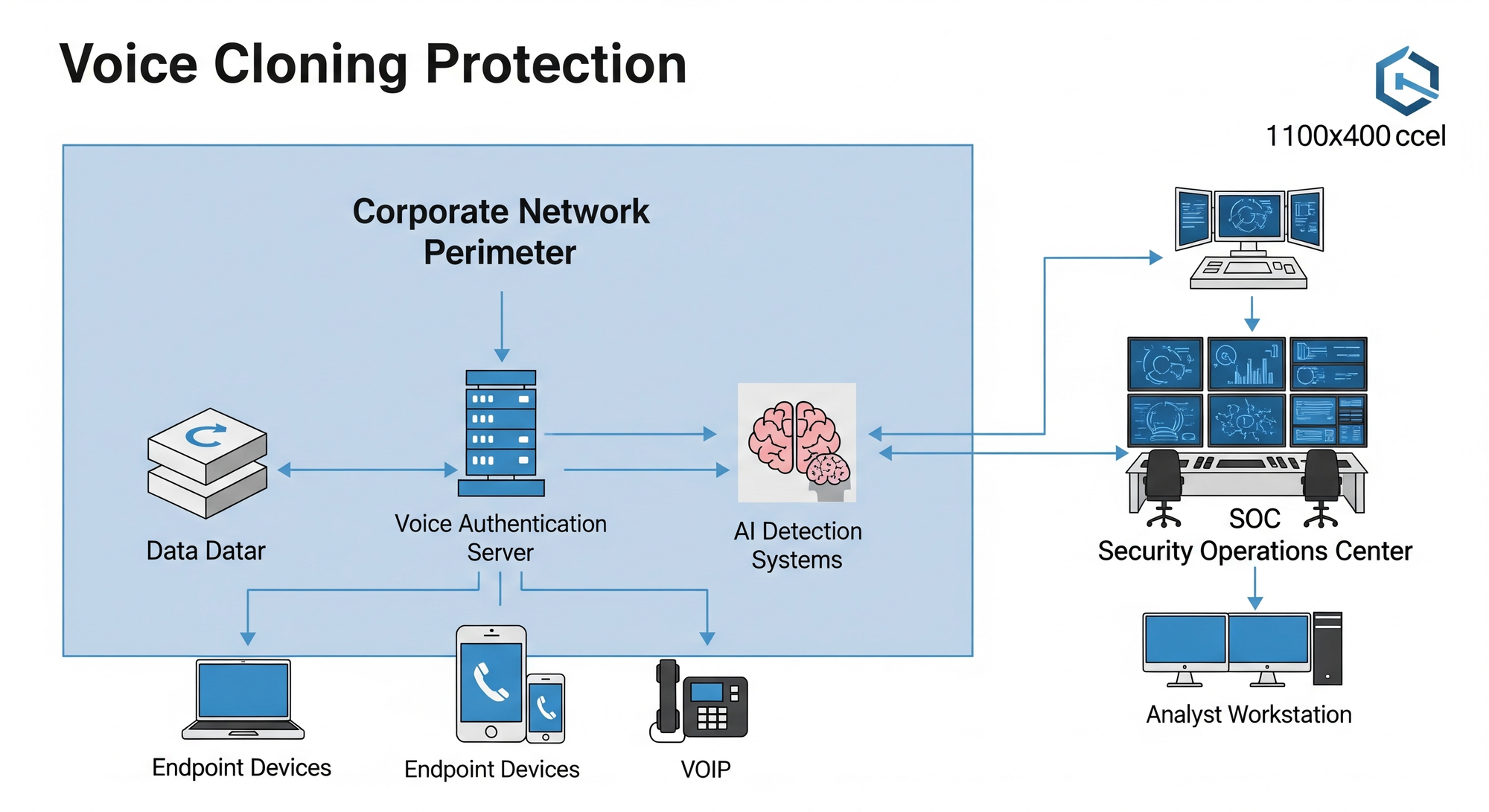

Advanced Detection Technologies

Real-Time Voice Analysis systems use machine learning to identify subtle artifacts in synthesized speech:

- Spectral Analysis: Detecting frequency patterns inconsistent with natural speech

- Temporal Anomaly Detection: Identifying unnatural timing patterns in speech generation

- Linguistic Pattern Analysis: Recognizing deviations from individual communication styles

- Emotional Authenticity Verification: Analyzing emotional consistency throughout conversations
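As a toy illustration of temporal anomaly detection, the sketch below flags speech whose pauses are suspiciously uniform. It assumes, purely for the sake of the example, that pause durations have already been extracted from the audio and that overly regular timing is a useful signal. The function names, the 0.25 threshold, and the sample values are hypothetical and not taken from any real detection product.

```python
from statistics import mean, pstdev

def pause_variability(pauses_ms):
    """Coefficient of variation (std dev / mean) of pause durations."""
    avg = mean(pauses_ms)
    return pstdev(pauses_ms) / avg if avg > 0 else 0.0

def looks_too_regular(pauses_ms, min_cv=0.25):
    """Flag timing that varies less than the (assumed) threshold."""
    return pause_variability(pauses_ms) < min_cv

# Made-up pause durations in milliseconds, for illustration only.
natural_like = [120, 480, 90, 650, 210, 330]
uniform_like = [200, 205, 198, 202, 201, 199]

print(looks_too_regular(natural_like))  # False: pauses vary widely
print(looks_too_regular(uniform_like))  # True: pauses are nearly identical
```

A single heuristic like this would be easy to evade on its own. Practical detectors would combine many such signals, which is why the list above spans spectral, temporal, linguistic, and emotional features.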

Implementation of Defensive Strategies

Organizational Policy Framework

Verification Protocols represent the most effective immediate defense against voice cloning attacks:

- Dual-Channel Verification: Require confirmation through a different communication method for any financial authorization

- Predetermined Verification Questions: Establish personal questions that only the real individual would know

- Time-Delay Policies: Implement mandatory waiting periods for large financial transactions

- Authority Escalation Requirements: Require multiple approvals for transactions exceeding predetermined thresholds
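Three of the controls above (dual-channel verification, time delays, and multiple approvals) can be expressed as a simple release check. The following is a minimal sketch under stated assumptions: the `TransferRequest` type, the dollar thresholds, and the 24-hour hold are hypothetical values chosen for illustration, and each organization would set its own.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta

# Hypothetical policy values; not taken from any standard or regulation.
DUAL_APPROVAL_THRESHOLD = 50_000
HOLD_THRESHOLD = 100_000
HOLD_PERIOD = timedelta(hours=24)

@dataclass
class TransferRequest:
    amount: float
    request_channel: str          # channel the request arrived on
    requested_at: datetime
    confirmed_channels: set = field(default_factory=set)
    approvers: set = field(default_factory=set)

def blocking_reasons(req, now):
    """Return the policy checks that still block release (empty = OK)."""
    reasons = []
    # Dual-channel: confirmation must come from a channel other than
    # the one the request arrived on.
    if not (req.confirmed_channels - {req.request_channel}):
        reasons.append("no confirmation on a second channel")
    # Authority escalation: large transfers need two distinct approvers.
    if req.amount >= DUAL_APPROVAL_THRESHOLD and len(req.approvers) < 2:
        reasons.append("needs at least two approvers")
    # Time delay: very large transfers wait out a mandatory hold.
    if req.amount >= HOLD_THRESHOLD and now - req.requested_at < HOLD_PERIOD:
        reasons.append("mandatory hold period not elapsed")
    return reasons

call_time = datetime(2025, 6, 10, 15, 47)  # hypothetical timestamp
request = TransferRequest(
    amount=2_300_000,
    request_channel="phone",
    requested_at=call_time,
    approvers={"finance_director"},
)
# Ten minutes after the call, all three checks still block the transfer.
print(blocking_reasons(request, now=call_time + timedelta(minutes=10)))
```

Under a policy like this, a request matching the $2.3M scenario would have been blocked no matter how convincing the voice sounded, because none of the checks depend on recognizing the caller.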

Technical Security Implementations

AI-Powered Detection Systems can identify voice cloning attempts in real-time:

- Deploy voice authentication systems that analyze multiple vocal biomarkers simultaneously

- Implement behavioral analysis tools that monitor communication patterns for anomalies

- Use blockchain verification for critical business communications requiring authenticity proof

- Install real-time fraud monitoring that flags unusual authorization patterns
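The last item, flagging unusual authorization patterns, can be illustrated with a basic statistical check. This sketch assumes a history of past transfer amounts is available and uses a z-score cutoff of 3.0. Both the `is_unusual_amount` function and the sample figures are hypothetical, and commercial monitoring tools use far richer models.

```python
from statistics import mean, stdev

def is_unusual_amount(history, amount, z_threshold=3.0):
    """Flag an amount that sits far outside the historical distribution."""
    if len(history) < 2:
        return True  # too little history to judge, so escalate by default
    spread = stdev(history)
    if spread == 0:
        return amount != history[0]
    z_score = abs(amount - mean(history)) / spread
    return z_score > z_threshold

# Made-up history of a department's previous wire transfers.
past_transfers = [42_000, 38_500, 51_000, 47_250, 40_100]

print(is_unusual_amount(past_transfers, 45_000))     # False: typical size
print(is_unusual_amount(past_transfers, 2_300_000))  # True: extreme outlier
```

A flag like this does not prove fraud. Its value is in forcing the request into the verification and approval steps before any money moves.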

Employee Training and Awareness

Human Factor Security remains crucial despite technological solutions:

- Conduct quarterly simulation exercises using AI-generated voice samples to test employee response

- Provide recognition training focusing on subtle indicators of synthesized speech

- Establish escalation procedures that encourage verification without fear of challenging authority

- Create incident reporting protocols that reward proactive verification behaviors

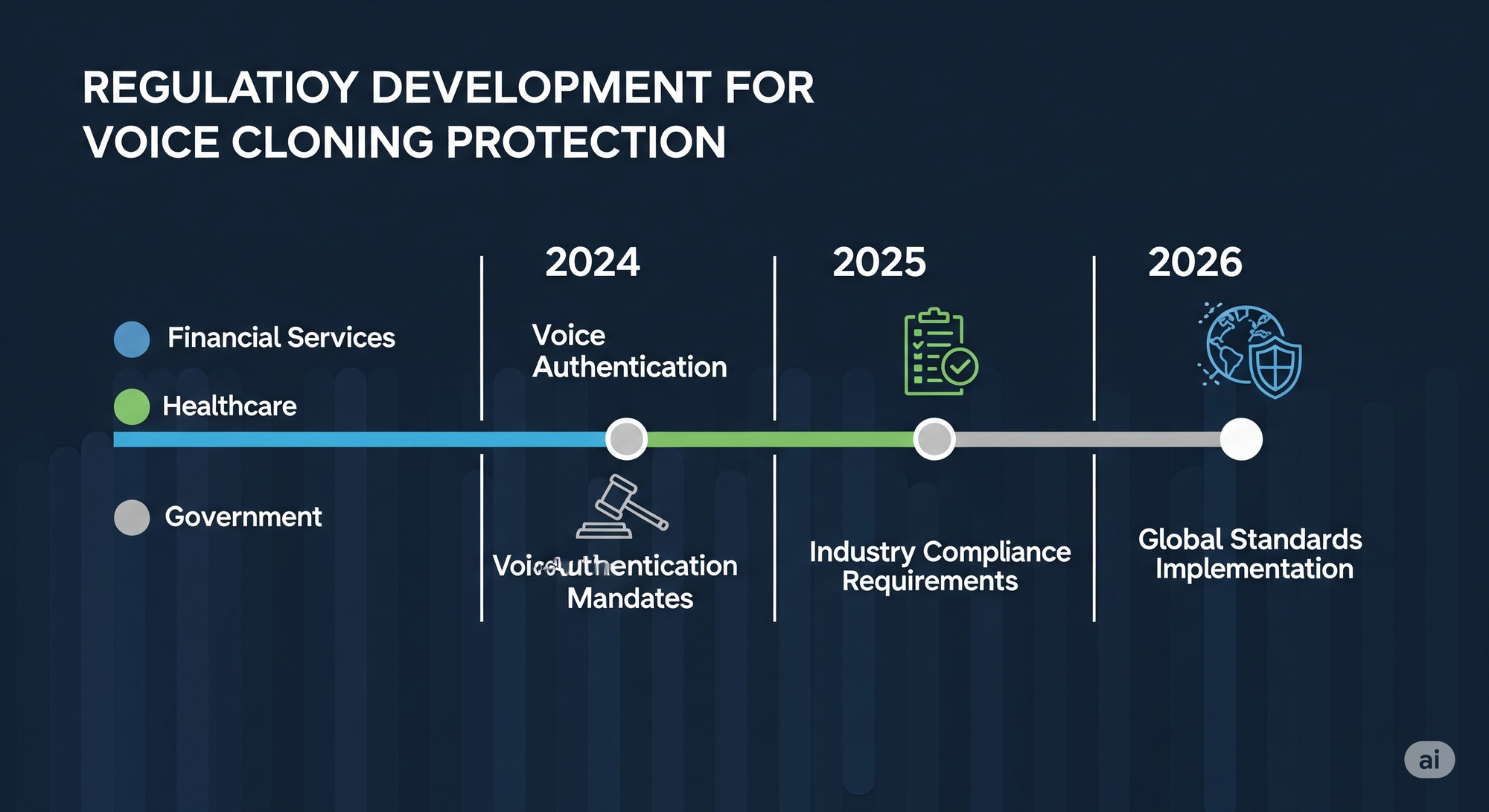

Regulatory and Legal Landscape

Current Legal Framework Gaps

Jurisdictional Challenges complicate prosecution of voice cloning crimes that often cross international boundaries. Current legislation struggles to address:

- Evidence authentication when determining the legitimacy of voice recordings

- Liability allocation between organizations and individuals who fall victim to sophisticated scams

- Cross-border enforcement of cybercrime laws when attackers operate from different countries

Emerging Regulatory Responses

Industry-Specific Compliance Requirements are developing rapidly:

- Financial services face new voice authentication mandates under revised cybersecurity frameworks

- Healthcare organizations must implement voice verification protocols under HIPAA enhancement proposals

- Government contractors require advanced voice authentication for classified communications

Future Threat Evolution

Next-Generation Attack Vectors

Video Deepfakes Combined with Voice Cloning represent the next evolution in social engineering attacks. Attackers are developing:

- Real-time video generation synchronized with cloned voices for video conference attacks

- Emotional manipulation algorithms that adapt responses based on target reactions

- Multi-target campaigns that simultaneously impersonate multiple executives

- Persistent infiltration techniques that establish long-term trust before executing financial fraud

Defensive Technology Advancement

Quantum-Enhanced Authentication systems under development aim to provide:

- Quantum-encrypted voice verification that's theoretically impossible to forge

- Biological voice authentication using unique vocal tract measurements

- Behavioral biometrics integration combining voice patterns with communication habits

- Predictive threat modeling that identifies potential attack scenarios before execution

Cost-Benefit Analysis of Protection

Investment Requirements

Comprehensive voice cloning protection requires strategic resource allocation:

- Initial technology investment: $150,000-$400,000 for enterprise-grade voice authentication systems

- Annual maintenance costs: $45,000-$75,000 for ongoing system updates and monitoring

- Training and compliance: $25,000-$50,000 annually for employee education and policy enforcement

- Insurance adjustments (an offset rather than a cost): 5-15% premium reductions for organizations with verified protection systems
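As a rough check on what these ranges add up to, the sketch below totals the initial investment plus recurring maintenance and training costs over five years. It assumes costs stay flat year over year and leaves out the insurance premium reductions, since the article gives them only as percentages of an unstated premium.

```python
YEARS = 5

# (low, high) ranges in USD, taken from the list above.
initial_investment = (150_000, 400_000)
maintenance_per_year = (45_000, 75_000)
training_per_year = (25_000, 50_000)

def total_cost(years):
    """Return the (low, high) total cost over the given number of years."""
    low = initial_investment[0] + years * (
        maintenance_per_year[0] + training_per_year[0])
    high = initial_investment[1] + years * (
        maintenance_per_year[1] + training_per_year[1])
    return low, high

five_year_low, five_year_high = total_cost(YEARS)
print(f"Five-year cost: ${five_year_low:,} to ${five_year_high:,}")
# Five-year cost: $500,000 to $1,025,000
```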

Return on Investment Calculations

Prevention Value Analysis demonstrates compelling financial justification:

- Average attack cost: $2.3M (based on recent case studies)

- Protection system cost: $300,000 total implementation

- Break-even point: Preventing just one successful $2.3M attack avoids a loss of about 767% of the $300,000 system cost, a net ROI of roughly 667%

- Long-term value: The $300,000 implementation cost is about 13% of a single $2.3M incident, before the recurring maintenance and training costs listed above
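The arithmetic behind these figures can be checked directly. Note that $2.3M divided by $300,000 is the ratio of avoided loss to cost (about 767%), while the conventional net ROI formula, (benefit - cost) / cost, gives about 667%. The variable names below are illustrative, and the inputs are the article's own figures.

```python
attack_cost = 2_300_000      # average attack cost cited above
protection_cost = 300_000    # total implementation cost cited above

# Avoided loss as a multiple of the money spent.
benefit_to_cost = attack_cost / protection_cost

# Conventional ROI: net gain divided by cost.
net_roi = (attack_cost - protection_cost) / protection_cost

# Implementation cost as a share of one incident.
cost_share = protection_cost / attack_cost

print(f"Benefit-to-cost ratio: {benefit_to_cost:.0%}")  # 767%
print(f"Net ROI:               {net_roi:.0%}")          # 667%
print(f"Cost vs. one incident: {cost_share:.0%}")       # 13%
```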

Conclusion

The $2.3M deepfake CEO scam represents more than an isolated incident—it's a preview of the sophisticated threats organizations face in 2025's AI-enhanced cybercrime landscape. Voice cloning technology has democratized advanced social engineering attacks, making previously impossible scams accessible to cybercriminals worldwide.

The evidence is overwhelming: a 442% increase in voice phishing attacks, $1.8 billion in global losses, and rapidly evolving attack sophistication all demand immediate defensive action. Organizations that delay implementing voice authentication and verification protocols expose themselves to catastrophic financial and reputational losses.

Success requires a comprehensive approach combining advanced detection technology, robust organizational policies, and continuous employee education. The investment in voice cloning protection represents essential insurance against threats that can destroy companies overnight.

The question isn't whether your organization will face voice cloning attacks—it's whether you'll be prepared when they arrive. Every day of delay increases vulnerability to threats that are becoming more sophisticated and harder to detect.

The technology exists to defend against these attacks. The business case is proven. The only remaining question is: How quickly can you implement protection before becoming the next headline?